Processing and Queuing Delays

Processing

*Introduction to Processing Delay* The Internet has evolved from a simple store-and-forward medium into a complex communications infrastructure. Routers need to implement a growing variety of functions, and as these functions increase in number and complexity, packets take more time to process and experience significant delay. In network simulations, processing delay is usually not accounted for because it is assumed to be negligible. However, processing delay in such networks can reach the order of long-distance propagation delays and is thus a significant contributor to total packet delay. The work summarized here measures different network applications and develops a model that characterizes packet processing cost with only a few parameters that can easily be obtained from a simulation.

To provide both more functionality and higher performance, router designs have changed from ASIC-based forwarding engines to software-programmable "network processors" (NPs), which have been developed in recent years. These NPs are typically single-chip multiprocessors with high-performance I/O components. A network processor is usually located at each input port of a router. Packet processing tasks are performed on the network processor before the packet is passed through the router switching fabric and on to the next network link. Because this processing is more complex, the router takes more time to handle each packet. The required performance is achieved by processing multiple packets in parallel on a set of processor cores, which increases overall router throughput and supports higher link rates. The delay experienced by an individual packet, however, is not reduced by this parallelism. Together with the growing complexity of packet handling, this makes the delay at network nodes even more important.

To illustrate the impact of processing cost, consider how processing delay contributes to the total packet delay. When a packet is sent from one node to the next, the following delays occur:

- Transmission delay (the time it takes to push the packet onto the link),

- Propagation delay (the time it takes for the packet to travel across the link),

- Processing delay (the time it takes to handle the packet on the network system),

- Queuing delay (the time the packet is buffered before it can be sent).
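The four components listed above add up to the per-hop delay of a packet. The following is a minimal sketch of that sum; the function name and all numeric values (packet size, link rate, link length, signal speed, processing delay) are hypothetical and chosen only for illustration.

```python
def total_nodal_delay(transmission_s, propagation_s, processing_s, queuing_s):
    """Sum the four per-hop delay components (all in seconds)."""
    return transmission_s + propagation_s + processing_s + queuing_s


# Hypothetical values for a single hop, chosen only for illustration.
packet_bits = 12_000        # a 1500-byte packet
link_rate_bps = 100e6       # 100 Mbit/s link
link_length_m = 200e3       # 200 km link
signal_speed_mps = 2e8      # assumed propagation speed in the medium

transmission = packet_bits / link_rate_bps      # time to push the bits onto the link
propagation = link_length_m / signal_speed_mps  # time to travel across the link
processing = 50e-6                              # assumed processing delay
queuing = 0.0                                   # assumed empty queue

delay = total_nodal_delay(transmission, propagation, processing, queuing)
print(f"total per-hop delay: {delay * 1e3:.3f} ms")
```

With these assumed numbers, the processing delay is small but not negligible next to the transmission delay, which is the situation the text describes.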

*Packet Processing Simulation* Four different network processing applications, ranging from simple forwarding to complex packet payload modification, were simulated using PacketBench. The simulations were performed on a set of actual network packet traces from both a LAN and the Internet. The specific applications are:

- IPv4-radix: an application that performs RFC 1812 compliant packet forwarding and uses a radix tree structure to store the entries of the routing table. The routing table is accessed to find the interface to which the packet must be sent, depending on its destination IP address.

- IPv4-trie: similar to IPv4-radix, this application also performs RFC 1812 based packet forwarding. The implementation uses a trie structure with combined level and path compression for the routing table lookup.

- Flow Classification: a common part of various applications such as firewalling, NAT, and network monitoring. The packets passing through the network processor are classified into flows, which are defined by a 5-tuple consisting of the IP source and destination addresses, the source and destination port numbers, and the transport protocol identifier.

- IPSec Encryption: an implementation of the IP Security Protocol in which the packet payload is encrypted using the 3DES (Triple-DES) algorithm. This algorithm is used in most commercial broadband VPN routers. This is the only application in which the packet payload is read and modified.
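To make the 5-tuple flow definition above concrete, here is a minimal sketch of a flow table in Python. It is not the PacketBench implementation; the `FlowKey` and `FlowTable` names, the per-flow counters, and the sample packets are all assumptions made for illustration.

```python
from collections import namedtuple

# The 5-tuple that identifies a flow, as described above.
FlowKey = namedtuple("FlowKey", "src_ip dst_ip src_port dst_port protocol")


class FlowTable:
    """Hypothetical in-memory flow table keyed by the 5-tuple."""

    def __init__(self):
        self._flows = {}  # FlowKey -> {"packets": int, "bytes": int}

    def classify(self, src_ip, dst_ip, src_port, dst_port, protocol, length):
        """Assign a packet to its flow and update that flow's counters."""
        key = FlowKey(src_ip, dst_ip, src_port, dst_port, protocol)
        stats = self._flows.setdefault(key, {"packets": 0, "bytes": 0})
        stats["packets"] += 1
        stats["bytes"] += length
        return key

    def stats(self, key):
        """Return a copy of the counters for a flow, or None if unseen."""
        entry = self._flows.get(key)
        return dict(entry) if entry is not None else None

    def flow_count(self):
        """Number of distinct flows seen so far."""
        return len(self._flows)


table = FlowTable()
# Made-up packets: two belong to the same TCP flow, one is a separate UDP flow.
web = table.classify("10.0.0.1", "192.0.2.7", 40000, 80, "TCP", 1500)
table.classify("10.0.0.1", "192.0.2.7", 40000, 80, "TCP", 400)
dns = table.classify("10.0.0.2", "192.0.2.53", 53000, 53, "UDP", 72)

print(table.flow_count(), table.stats(web), table.stats(dns))
```

A hash-based lookup like this touches only header fields, which is why flow classification costs are largely independent of packet size, unlike payload encryption.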

*Packet Processing Instructions* A simple packet processing model describes the processing cost as a function of a few parameters. The model uses two parameters, $\alpha_a$ and $\beta_a$, which are specific to each network processing application $a$:

• Per-packet processing cost $\alpha_a$. This parameter reflects the instructions that must be executed for each packet regardless of its size.

• Per-byte processing cost $\beta_a$. This parameter reflects the processing cost that depends on the packet size.

The total number of instructions executed, $i_a(l)$, by an application $a$ for a packet of length $l$ can then be approximated by $i_a(l) = \alpha_a + \beta_a \cdot l$.
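The linear cost model above can be sketched in a few lines of Python. The function names are hypothetical, the sample measurements are made up, and the ordinary least-squares fit is only one plausible way to obtain the two parameters from simulation data; the source does not state which fitting method was used.

```python
def instruction_count(alpha, beta, length_bytes):
    """Linear cost model: per-packet cost plus per-byte cost times length."""
    return alpha + beta * length_bytes


def fit_cost_model(samples):
    """Least-squares fit of (length, instructions) pairs to alpha + beta * l.

    The fitting method is an assumption, not taken from the source.
    """
    n = len(samples)
    mean_l = sum(l for l, _ in samples) / n
    mean_i = sum(i for _, i in samples) / n
    var_l = sum((l - mean_l) ** 2 for l, _ in samples)
    cov = sum((l - mean_l) * (i - mean_i) for l, i in samples)
    beta = cov / var_l
    alpha = mean_i - beta * mean_l
    return alpha, beta


# Made-up measurements (packet length in bytes, instructions executed),
# generated here from alpha = 500 and beta = 10 purely for illustration.
samples = [(64, 1140), (512, 5620), (1500, 15500)]
alpha, beta = fit_cost_model(samples)
print(alpha, beta, instruction_count(alpha, beta, 1000))
```

An application that only inspects headers would be expected to show a per-byte cost close to zero, while one that touches the payload, such as encryption, would show a large one.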

*Conclusion* In packet-switched networks, processing delay is the time it takes for a router to process the packet header. Processing delay is a key component of network delay. During packet processing, the router can check for bit-level errors in the packet that occurred during transmission and determine the packet's next destination. The processing delay in high-speed routers is usually on the order of microseconds or less. After this nodal processing, the router directs the packet to the queue, where further delay can occur (queuing delay). In the past, processing delay was often neglected as insignificant compared to other forms of network delay. However, in some systems the processing delay can be quite large, especially where routers perform complex encryption algorithms and inspect or modify packet contents. Deep packet inspection, which some networks use to examine packet contents for security, legal, or other reasons, can cause very large delays and is therefore performed only at selected inspection points. Routers that perform network address translation also have higher than normal processing delay because they need to inspect and modify both incoming and outgoing packets.

Source: Characterizing Network Processing Delay

Queue Delay

Queuing delay is the time a packet waits in a queue before it can be transmitted. It typically arises when several packets need to be sent over the same link at about the same time. This section explains the factors that affect queuing delay and how the delay can be formulated.

Factors Affecting Queue Delay Time

Two main factors affect the queuing delay: the rate at which packets arrive at the queue and the transmission rate of the link. Let a be the average rate at which packets arrive at the queue (packets/sec), R the transmission rate at which bits are sent out of the queue (bits/sec), and L the size of a packet in bits. The ratio La/R is then the traffic intensity, a dimensionless quantity that determines the extent of the queuing delay.

La/R Must Not Exceed 1

There is one golden rule in traffic engineering: "design your system so that the traffic intensity is no greater than one". What happens if the traffic intensity is greater than one? If La/R > 1, bits arrive at the queue at a higher average rate than the link can transmit them. The queue then keeps growing, and the queuing delay increases without bound and approaches infinity unless packets stop arriving at the queue.
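The effect of a traffic intensity above one can be sketched with a simple fluid approximation, in which bits arrive at an average rate of La and leave at rate R. The function names and the numbers are hypothetical, and the model ignores packet boundaries and finite buffers.

```python
def traffic_intensity(packet_bits, arrival_rate_pps, link_rate_bps):
    """Traffic intensity La/R (dimensionless)."""
    return packet_bits * arrival_rate_pps / link_rate_bps


def backlog_bits(seconds, packet_bits, arrival_rate_pps, link_rate_bps):
    """Bits waiting after `seconds`, starting from an empty queue.

    Fluid approximation (an assumption): the backlog grows at La - R
    bits per second when La > R and stays at zero otherwise.
    """
    arriving_bps = packet_bits * arrival_rate_pps
    return max(0.0, (arriving_bps - link_rate_bps) * seconds)


# Hypothetical link: 12000-bit packets, 100 packets/sec, 1 Mbit/s.
L, a, R = 12_000, 100, 1_000_000
print("intensity:", traffic_intensity(L, a, R))
for t in (1, 10, 100):
    backlog = backlog_bits(t, L, a, R)
    # Time a newly arriving bit would wait behind the current backlog.
    print(f"t={t:>3}s backlog={backlog:.0f} bits wait={backlog / R:.1f} s")
```

In this sketch the waiting time grows linearly with elapsed time, which is the unbounded growth the rule is meant to prevent.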

What About La/R ≤ 1?

In this case, the queuing delay depends on how the packets arrive at the queue: periodically or in bursts. If packets arrive periodically, with one packet arriving every L/R seconds, then every packet arrives at an empty queue and there is no queuing delay. If instead packets arrive in bursts, say N packets arrive simultaneously every N(L/R) seconds, then the first packet transmitted has no queuing delay, the second has a queuing delay of L/R seconds, and the third has a delay of 2(L/R) seconds. In general, the n-th packet transmitted has a queuing delay of (n-1)(L/R) seconds.
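The burst case above can be checked with a short sketch. The function name and the burst parameters are hypothetical; the only thing taken from the text is the rule that the n-th packet of a burst waits (n-1)(L/R) seconds.

```python
def burst_queuing_delays(n_packets, packet_bits, link_rate_bps):
    """Queuing delay in seconds of each packet in a burst of N packets.

    The n-th packet transmitted waits for the n - 1 packets ahead of it,
    each of which takes L/R seconds to transmit.
    """
    service_time = packet_bits / link_rate_bps  # L/R
    return [(n - 1) * service_time for n in range(1, n_packets + 1)]


# Hypothetical burst: 4 packets of 8000 bits on a 1 Mbit/s link.
delays = burst_queuing_delays(4, 8_000, 1_000_000)
average = sum(delays) / len(delays)
print([f"{d * 1e3:.0f} ms" for d in delays], f"average {average * 1e3:.0f} ms")
```

The average over the burst works out to (N-1)/2 times L/R, so larger bursts raise the average delay even though the traffic intensity is unchanged.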

Source: archive.is

Example

Processing Delay

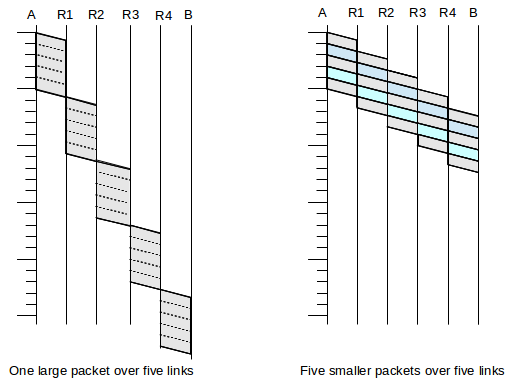

If store-and-forward switches are involved, smaller packets have much better throughput. As an example of this, consider a path from A to B with four switches and five links:

A───────R1───────R2───────R3───────R4───────B

Suppose we send either one big packet or five smaller packets. The relative times from A to B are illustrated in the following figure:

One large packet over five links vs. Five smaller packets over five links

The point is that we can take advantage of parallelism: while the R4–B link above is handling packet 1, the R3–R4 link is handling packet 2 and the R2–R3 link is handling packet 3 and so on. The five smaller packets would have five times the header capacity, but as long as headers are small relative to the data, this is not a significant issue.
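The pipelining argument above can be made concrete with a small calculation. This sketch assumes equal link rates on every hop and ignores propagation, processing, and queuing delay as well as header overhead; the function name and the numbers are hypothetical.

```python
def store_and_forward_time(total_bits, n_packets, n_links, link_rate_bps):
    """Time until the last packet has fully arrived at the destination.

    Assumes equal-size packets and identical links. The first packet needs
    n_links transmission times to cross the path, and each later packet
    arrives one transmission time after the previous one.
    """
    per_packet = (total_bits / n_packets) / link_rate_bps
    return (n_links + n_packets - 1) * per_packet


# Hypothetical transfer: 50000 bits over the five links A-R1-R2-R3-R4-B at 1 Mbit/s.
one_big = store_and_forward_time(50_000, 1, 5, 1_000_000)
five_small = store_and_forward_time(50_000, 5, 5, 1_000_000)
print(f"one large packet:    {one_big * 1e3:.0f} ms")
print(f"five smaller packets: {five_small * 1e3:.0f} ms")
```

Under these assumptions the five smaller packets need 9 small transmission times instead of 5 large ones, so the transfer finishes in 9/25 of the time.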

Source: An Introduction to Computer Networks

Queuing Delay

Assume a constant transmission rate of R = 1300000 bps, a constant packet length of L = 2800 bits, and an average packet arrival rate of a = 26 packets/second. What is the queuing delay in milliseconds?

Queuing Delay:

= (La/R) * (L/R) * (1 - (La/R)) * 1000

With La/R = (2800 * 26) / 1300000 = 0.056:

= (0.056) * (2800 / 1300000) * (1 - 0.056) * 1000

≈ 0.1139 ms
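The calculation above can be reproduced with a few lines of Python. The function name is hypothetical, and the formula is taken as given in this worked example rather than as a general queuing result.

```python
def queuing_delay_ms(packet_bits, arrival_rate_pps, link_rate_bps):
    """Queuing delay in milliseconds, using the formula from the example above:
    (La/R) * (L/R) * (1 - La/R) * 1000.
    """
    intensity = packet_bits * arrival_rate_pps / link_rate_bps  # La/R
    return intensity * (packet_bits / link_rate_bps) * (1 - intensity) * 1000


delay_ms = queuing_delay_ms(packet_bits=2800, arrival_rate_pps=26, link_rate_bps=1_300_000)
print(f"queuing delay: {delay_ms:.4f} ms")
```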